By Niamh Healy

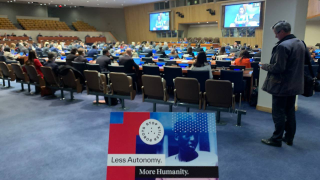

Recent controversy over an ‘exams algorithm’ in the UK has shown how the unthinking use of artificial intelligence can have serious and harmful effects on people’s lives. This context framed the launch, on the 2nd of September 2020, of the Universities campaign run by the UK coalition of the Campaign to Stop Killer Robots.

The Campaign to Stop Killer Robots is working to prevent one of the most extreme examples of AI’s possible hazards: the creation of autonomous weapons, in which decisions over the use of force are made without meaningful human control. This online event brought together a core group of students from 12 universities to learn about the campaign and consider their role in the movement. The UK coalition is made up of 16 organizations working together towards the goal of UK support for a ban on autonomous weapons systems. Universities can play a key role in reaching this goal, as their research may have applications in lethal autonomous weapons systems (LAWS). The aim of the Universities campaign is for universities to commit to the Future of Life Institute’s Lethal Autonomous Weapons pledge: neither to participate in nor to support the development, manufacture, trade, or use of lethal autonomous weapons.

The event began with expert speakers from the UK coalition, Article 36, Harvard Law School and Human Rights Watch all describing their personal journeys and explaining how they had come to work on the issue of autonomy in weapons systems. A recurrent theme of these stories was the importance of collaboration and coalition-building in successful campaigning. The composition of the group of students attending appropriately reflected this, with invitees from various UK student and youth organizations including Amnesty International’s Student Action Network, Student Young Pugwash UK, the United Nations Association, and the Campaign Against Arms Trade.

The session included an introduction to LAWS. Richard Moyes from Article 36 explained that identifying and categorizing LAWS is still an ongoing process. The campaign’s focus on LAWS reflects a broader concern about the direction in which military technologies are moving and how AI will be integrated into existing technology. The campaign’s name is therefore a deliberate choice: it is meant to startle, and to evoke a dramatic image of where this technology might head without active intervention. Roughly, we can understand LAWS as technologies or systems in which machines make decisions about where force is applied. What is concerning about this technology, Moyes explained, is the retreat of human control into the background of these incredibly significant decisions. What does it mean for human morality if machines are making some of our most morally sensitive decisions?

Bonnie Docherty from Harvard Law School and Human Rights Watch explained the political processes currently grappling with these technologies. At present, no international treaty specifically regulates this technology. However, as Docherty noted, discussions within the framework of the Convention on Certain Conventional Weapons (CCW) are ongoing. This treaty restricts or prohibits weapons deemed to be excessively injurious or to have indiscriminate effects, and campaigners hope that the CCW will negotiate a new protocol covering LAWS.

And what of universities? How can universities and their students engage? Verity Coyle from the UK coalition explained that universities are incredibly important: they train the next generation of leaders while conducting research that will shape the future of humanity. The aim of the campaign is not to shut down research; rather, it aims to encourage universities to ensure that their own innovations are not used to build lethal autonomous weapons. Students are encouraged to ask and investigate how research at their universities might contribute to the development of LAWS. This will not be an easy task. As Moyes observed, the potential applications of relevant research are often ambiguous. Research into machine object recognition, for example, has applications in autonomous driving technology but may also be used for military targeting. However, part of the campaign’s work is to establish a boundary between what is and is not acceptable. As innovators of this technology, universities should help articulate a moral position on how autonomous technologies are used, and take ownership of their own discoveries. If universities, their researchers and students commit to the Future of Life Institute’s pledge, it will demonstrate that a key constituency within the UK, our current and future leaders in research and technology, rejects the prospect of lethal autonomous weapons.

In the coming months, the students who attended last month’s event will begin a conversation on their campuses (whether virtual or in person) about the role their universities will play in the debate on lethal autonomous weapons. And they will do this amongst a student population keenly aware of how unregulated AI can harm lives, even if in less extreme ways than the damage LAWS could cause. It is hoped that this new constituency will help guide the UK towards supporting a ban on lethal autonomous weapons.

Keep up to date with all the latest news from the UK Campaign to Stop Killer Robots by following them on Twitter @UK_Robots.

Niamh Healy is President of Student Young Pugwash UK. Originally published on 13th October 2020.

Photo: A robot taking part in a press briefing held by the Campaign to Stop Killer Robots. Credit: UN Photo/Evan Schneider