The prohibition of fully autonomous weapon systems is needed to prevent a new arms race, writes Noel Sharkey

In January 2013, Ben Emmerson QC, the UN Special Rapporteur on counter-terrorism and human rights, launched an inquiry examining the impact of the rise in the use of drones and targeted killings. This timely inquiry comes at a point when automated warfare technology is advancing at a worrying rate.

We are rapidly moving along a course towards the total automation of warfare, and I believe that there is a line that must not be crossed: namely, where the decision to kill humans is delegated to machines. Weapon systems should not be allowed autonomously to select their own human targets and engage them with lethal force.

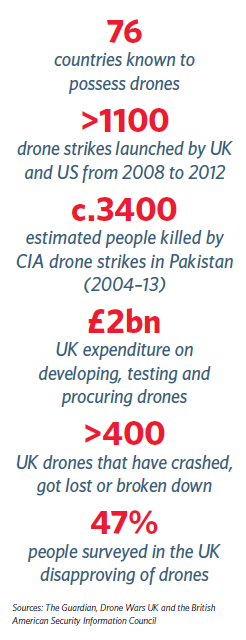

Drones have been the most controversial tool in the US ‘war on terror’. Yet despite the questionable legality of the CIA drone strikes in Pakistan and Africa, drone sales are a highly competitive international business. More than 70 countries now have the technology and sales are predicted to exceed $89bn within the next 10 years.

Companies hungry to increase their share of the market are turning their attention to new developments such as autonomous operation – i.e. robots that do not require direct human oversight. This has been high on the US military agenda since 2004 for a number of reasons.

Remotely controlled drones are currently only used against low-tech communities in countries where permission to use the air space has been granted. More sophisticated, and less permissive, opponents could adopt counter strategies that would render drones useless by jamming communication signals. But a fully autonomous drone could seek out its target without having to communicate with an operator. The use of autonomous drones is also likely to reduce military costs and the number of personnel required, and to improve operation by stripping out human error and response-time limitations, allowing for sharp turns and manoeuvres at hypersonic speeds.

These developments are well under way. UK defence and aerospace company BAE Systems will be testing its Taranis intercontinental autonomous combat aircraft demonstrator this spring. The Chinese Shenyang Aircraft Corporation is working on an unmanned supersonic fighter aircraft, the first drone designed for aerial dogfights. And the US has tested Boeing’s Phantom Ray and Northrop Grumman’s X-47B supersonic drones, which are due to appear on US aircraft carriers in the Pacific around 2019.

The US programme has also tested an unmanned combat aircraft that can travel 13,000 mph (20,921.5 kph). The aim is to be able to reach anywhere on the planet within 60 minutes.

These programmes are paving the way for very powerful, effective and flexible killing machines, well outside the speed of plausible human intervention, that are rapidly – and drastically – changing the nature of combat. The resultant impact on international humanitarian law could be problematic.

How, for instance, can the Geneva Conventions be applied by machines that cannot distinguish between civilians and combatants, and that cannot determine what constitutes proportionate force? Future technological improvements are highly unlikely to yield great advances in the sort of judgment and intuitive reasoning that humans employ on the battlefield.

In November 2012, Human Rights Watch and the Harvard Law Clinic published recommendations urging all states to adopt national and international laws to prohibit the development, production and use of fully autonomous weapons. Three days later, the US Department of Defense issued a directive on “autonomy in weapons systems” that “once activated, can select and engage targets without further intervention by a human operator”.

It gives developers the green light by assuring us that all such weapons will be tested thoroughly from development to employment to ensure that all applicable laws are followed. But I have serious issues with anyone’s ability to verify such computer systems. Moreover, if another country were to deploy such weapons, would the US really let them have the military advantage and not deploy theirs, thoroughly tested or not?

The US directive repeatedly stresses the establishment of guidelines to minimise the probability of failures that could lead to unintended engagement or loss of control. But the list of possible failures is long and includes: “human error, human-machine interaction failures, software coding errors, malfunctions, communications degradation, enemy cyber-attacks, countermeasures or actions, or unanticipated situations on the battlefield.”

This list points to a problem with the whole enterprise. How can researchers possibly minimise the risk of unanticipated situations on the battlefield? How can a system be fully tested against adaptive, unpredictable enemies?

The US directive presents a blinkered outlook that appears to ignore the likelihood that US robots will encounter similar technology from other sophisticated powers. If two or more machines running unknown programs encounter each other, the outcome is unpredictable and could create unforeseeable harm to civilians.

It seems that an international treaty banning fully autonomous robot weapon systems is the only rational approach. We need to act now before too many countries and military contractors put large investments into the development of these systems, and before there is an arms race from which we cannot return.

Noel Sharkey is Professor of Artificial Intelligence and Robotics and Professor of Public Engagement at the University of Sheffield